Queueing Theory - Part 3: Service policies

In queueing theory, service policies refer to the rules and methods governing how customers are served in a queue. These policies significantly impact system performance, including wait times, queue lengths, and overall customer satisfaction. Below are some common service policies:

First-In, First-Out (FIFO)

Customers are served in the order they arrive. This is the most straightforward policy and promotes fairness but can lead to longer wait times if a slow customer is at the front.

In software development, the First-In, First-Out (FIFO) approach can be likened to task scheduling in systems where processes are handled in the order they arrive, promoting simplicity but potentially causing delays if a resource-intensive task blocks subsequent ones. A real example of FIFO in software development is seen in web servers processing HTTP requests. For instance, if a server handles incoming requests sequentially, a time-consuming request (such as generating a large report) could delay all subsequent requests, affecting response times for users. This illustrates the trade-offs of FIFO in terms of fairness versus efficiency. Another example of FIFO in software development is seen in print queue management. Printers process documents in the order they are submitted, which is fair but can cause delays if a large or complex print job is queued first, making others wait unnecessarily. Similarly, in database transaction processing, a system might handle queries in the order they are received. If one query takes significantly longer (like a complex JOIN operation), subsequent simpler queries can face increased latency, potentially impacting application performance.

Last-In, First-Out (LIFO)

The last customer to arrive is the first to be served. This policy can be effective in environments like stack-based processing systems, where tasks are handled in reverse order of arrival. A common analogy is a pile of plates in a cafeteria: the last plate placed on the stack is the first one taken off for use. Similarly, in warehousing with pallet flow racks, items loaded last are accessed first, making LIFO efficient for products with short shelf lives or rapidly changing demand. In software, this is exemplified by function call stacks, where the most recent function is executed first during the return phase.

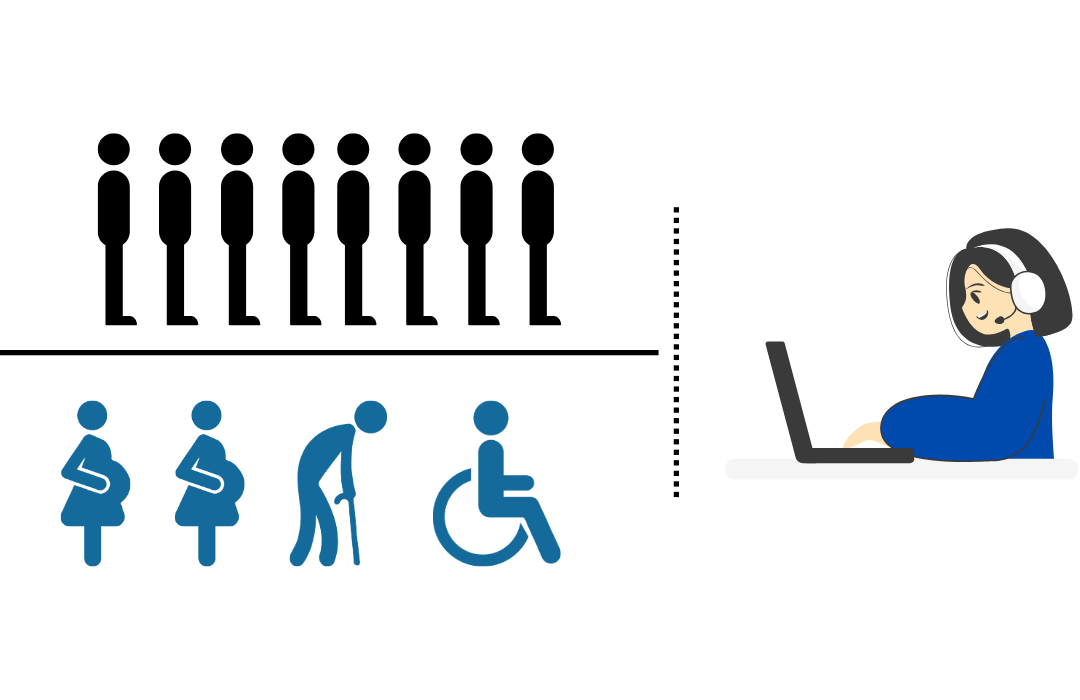

Priority Service

Customers are assigned different priority levels based on criteria such as urgency, status, or service type. Higher-priority customers are served before others, which can improve service for critical cases but may lead to dissatisfaction among lower-priority customers. This approach is commonly used in emergency rooms, where patients with life-threatening conditions are treated before those with minor injuries, regardless of arrival order. In software systems, priority queues are often implemented in scheduling algorithms, such as task schedulers in operating systems, where higher-priority processes like system-critical tasks are executed ahead of less important user processes. While efficient for handling critical workloads, this method can result in "starvation," where low-priority tasks are indefinitely delayed.

Round Robin

Each customer receives a fixed time slice or service duration before the next customer is served, with the process cycling through the queue repeatedly until all are served. This approach is commonly used in computer systems for process scheduling, ensuring fairness and preventing any single process from monopolizing system resources. For instance, in CPU scheduling, each process gets a time slot to execute, allowing multiple tasks to share the CPU efficiently. However, this can lead to delays for time-sensitive tasks if the time slice is too short or if the queue is too large.

Random Selection

Customers are selected for service at random, regardless of their arrival order or position in the queue. While this approach creates unpredictability, it can be effective in environments where fairness isn’t the primary concern or where it’s crucial to minimize the average wait time. For example, in lottery-based systems or load balancing for distributed servers, random selection helps distribute tasks more evenly and reduces bottlenecks. However, this method can lead to frustration for customers left waiting longer than expected.

Conclusion

Choosing an appropriate service policy is crucial for optimizing performance in queueing systems. Each policy has its advantages and disadvantages, depending on the context and objectives of the service. By carefully considering the service policies, organizations can enhance efficiency, reduce wait times, and improve customer satisfaction.

Published on November 21, 2024